Eliciting Expert Judgments

In an ideal world, uncertainties are reduced quickly and efficiently through research, monitoring, modeling or other analysis, and information is provided in time to aid decision making. However, it is not always possible to conduct new research, and even when it is, such research typically reduces uncertainty but rarely eliminates it. In these cases, the best way forward may be to use structured expert judgments. There is a well-established literature on the methods required for eliciting defensible and transparent judgments in the face of significant uncertainty, and on the opportunities and limitations of using such judgments as aids to improved management.

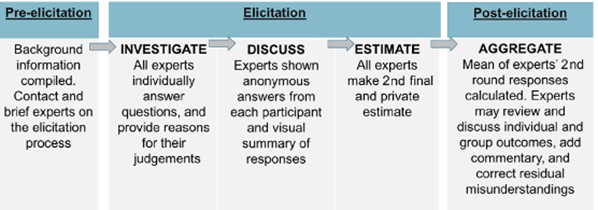

The IDEA protocol [1] is widely regarded as best practice. It involves three stages: pre-elicitation, elicitation, and post-elicitation.

Commonly cited elements of good practice include [2]:

- Identify multiple experts based on an explicit selection process and criteria, and include experts from different domains and disciplines of knowledge (e.g., scientific, Indigenous and local knowledge).

- Clearly define the question for which a judgment will be elicited, making sure that the question separates, as much as possible, technical judgments from value judgments.

- Decompose complex judgments into simpler ones. This will improve both the quality of the judgment and, to the extent it helps to separate a specific technical judgment from the management outcomes of that judgment, its objectivity.

- Document the expert’s conceptual model. Not only will this help the quality of the judgment and its communication to others, but it will create a clear and traceable account that will facilitate future peer review.

- Use structured elicitation methods to guard against common cognitive biases that have been shown to consistently reduce the quality of judgments [3].

- Express judgments quantitatively where possible. The use and interpretation of qualitative descriptions of magnitude, probability or frequency vary tremendously among individuals.

- Characterize uncertainty in the judgment explicitly, using quantitative expressions of uncertainty wherever possible to avoid ambiguity.

- Document conditionalizing assumptions. Differences in judgments are often explained by differences in the underlying assumptions or conditions for which a judgment is valid.

- Explore competing judgments collaboratively, through workshops involving local and scientific experts, with an emphasis on collaborative learning.

- Choose methods of aggregation carefully. While mean values across experts may be a good approach in some cases, in others it may be more important to understand the differences in values (as a guide to adaptive management, for example) than to settle on a single value.
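As a rough illustration of the points on quantitative judgments, explicit uncertainty and aggregation, the sketch below records each expert's judgment as a lowest, best and highest estimate, then reports both an equal-weight mean and the spread of best estimates across experts. The `Judgment` class, the `aggregate` function and the numbers are hypothetical and chosen only for illustration. Equal weighting is an assumption here, not a recommendation; other aggregation schemes may suit a given decision better.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Judgment:
    """One expert's quantitative judgment (hypothetical structure)."""
    expert: str
    low: float   # lowest plausible value
    best: float  # best estimate
    high: float  # highest plausible value


def aggregate(judgments):
    """Equal-weight mean of each bound, plus the spread of best estimates.

    Equal weighting is an assumption made for this sketch.
    """
    bests = [j.best for j in judgments]
    return {
        "low": mean(j.low for j in judgments),
        "best": mean(bests),
        "high": mean(j.high for j in judgments),
        # Keep the disagreement visible rather than hiding it in the mean.
        "best_range": (min(bests), max(bests)),
        "best_sd": stdev(bests) if len(bests) > 1 else 0.0,
    }


# Illustrative, made-up judgments from three experts.
judgments = [
    Judgment("A", 10, 20, 40),
    Judgment("B", 15, 25, 35),
    Judgment("C", 40, 60, 90),
]

summary = aggregate(judgments)
print(summary)
```

In this made-up case the mean best estimate sits between two low judgments and one much higher one, so the range and standard deviation may be more informative than the mean alone. A gap like this is often a prompt to revisit the experts' underlying assumptions before settling on a single value.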

[1] Hemming et al., 2017

[2] Gregory et al., 2012

[3] Morgan and Henrion, 1990